1 Introduction

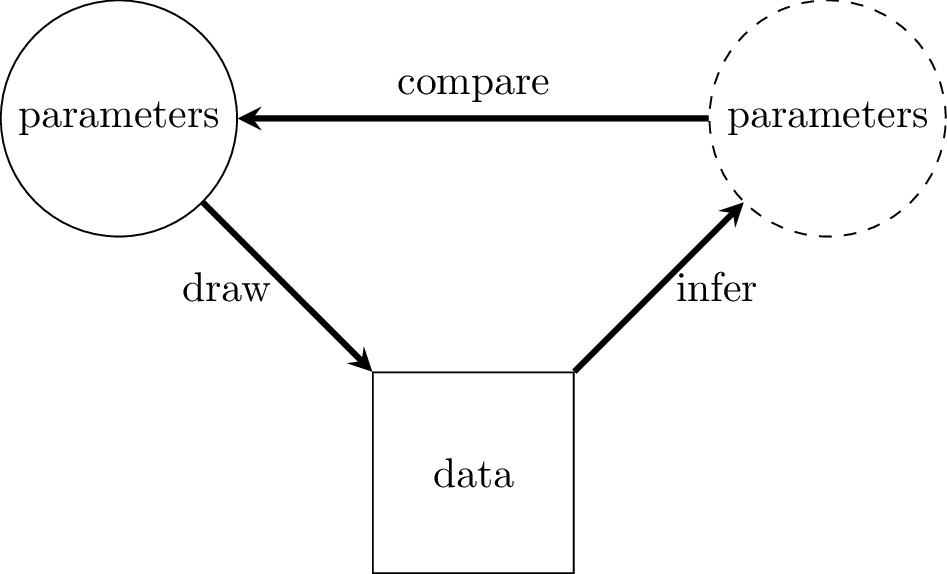

Simulation studies can be used to assess the performance of a model. The basic idea is to generate some parameter values, use these parameters to generate some data, use the data to try to infer the original parameter values, and then see how close the inferred parameter values are to the actual parameter values.

Figure 1.1: Simulation study of a model

Function report_sim() automates the process of doing a simulation study. We are still experimenting with report_sim(), and the interface may change. Suggestions are welcome, ideally through raising an issue here.

2 Estimation model matches data generation model

The most straightforward type of simulation is when the estimation model' used to do the inference matches thedata generation model’ used to create the data. Even when the estimation model matches the data generation model, the inferred values for the parameters will not exactly reproduce the actual values, since data is drawn at random, and provides a noisy signal about parameters it was generated from. However, if the experiment is repeated many times, with a different randomly-drawn dataset each time, the errors should more or less average out at zero, 50% credible intervals should contain the true values close to 50% of the time, and 95% credible intervals should contain the true values close to 95% of the time.

To illustrate, we use investigate the performance of a model of divorce rates in New Zealand. We reduce the number of ages and time periods to speed up the calculations.

library(bage)

#> Loading required package: rvec

#>

#> Attaching package: 'rvec'

#> The following objects are masked from 'package:stats':

#>

#> sd, var

#> The following object is masked from 'package:base':

#>

#> rank

library(dplyr, warn.conflicts = FALSE)

library(poputils)

divorces_small <- nzl_divorces |>

filter(age_upper(age) < 40,

time >= 2016) |>

droplevels()

mod <- mod_pois(divorces ~ age * sex + time,

data = divorces_small,

exposure = population)

mod

#>

#> ------ Unfitted Poisson model ------

#>

#> divorces ~ age * sex + time

#>

#> exposure = population

#>

#> term prior along n_par n_par_free

#> (Intercept) NFix() - 1 1

#> age RW() age 4 4

#> sex NFix() - 2 2

#> time RW() time 6 6

#> age:sex RW() age 8 8

#>

#> disp: mean = 1

#>

#> n_draw var_time var_age var_sexgender

#> 1000 time age sexTo do the simulation study, we pass the model to report_sim(). If only one model is supplied, report_sim() assumes that that model should be used as the estimation model and as the data generation model. By default report_sim() repeats the experiment 100 times, generating a different dataset each time.

set.seed(0)

res <- report_sim(mod_est = mod)

#> Warning: Large values for `lambda` used to generate Poisson variates.

#> ℹ 1.4 percent of values for `lambda` are above 1e+08.

#> ℹ Using deterministic approximation to generate variates for these values.

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

res

#> $components

#> # A tibble: 9 × 7

#> term component .error .cover_50 .cover_95 .length_50 .length_95

#> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 (Intercept) effect -0.109 0.48 0.95 1.18 3.40

#> 2 age effect 0.119 0.54 0.98 1.46 4.23

#> 3 age hyper 0.142 0.42 0.82 0.605 2.11

#> 4 sex effect -0.0886 0.48 0.94 1.13 3.29

#> 5 time effect -0.0463 0.53 0.97 1.27 3.69

#> 6 time hyper 0.0235 0.56 0.86 0.434 1.44

#> 7 age:sex effect -0.0000223 0.473 0.949 1.43 4.14

#> 8 age:sex hyper -0.00980 0.47 0.86 0.501 1.75

#> 9 disp disp -0.0322 0.51 0.93 0.290 0.861

#>

#> $augment

#> # A tibble: 2 × 7

#> .var .observed .error .cover_50 .cover_95 .length_50 .length_95

#> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 .fitted 74.2 0.000114 0.511 0.950 0.00829 0.0240

#> 2 .expected 74.2 -25.8 0.499 0.936 38.1 135.The output from report_sim() is a list of two data frames. The first data frame contains results for parameters associated with the components() function: main effects and interactions, associated hyper-parameters, and dispersion. The second data frame contains results for parameters associated with the augment() function: the lowest-level rates parameters.

As can be seen in the results, the errors do not average out at exactly zero, 50% credible intervals do not contain the true value exactly 50% of the time, and 95% credible intervals do not contain the true value exactly 95% of the time. However, increasing the number of simulations from the default value of 100 to, say, 1000 will reduce the average size of the errors closer to zero, and bring the actual coverage rates closer to their advertised values. When larger values of n_sim are used, it can be helpful to use parallel processing to speed up calculations, which is done through the n_core argument.

One slightly odd feature of the results is that the mean for .expected is very large. This reflects the fact that the data generation model draws some extreme values. We are developing a set of more informative priors that should avoid this behavior in future versions of bage.

3 Estimation model different from data generation model

In actual applications, no estimation model ever perfectly describes the true data generating process. It can therefore be helpful to see how robust a given model is to misspecification, that is, to cases where the estimation model differs from the data generation model.

With report_sim(), this can be done by using one model for the mod_est argument, and a different model for the mod_sim argument.

Consider, for instance, a case where the time effect is generated from an AR1() prior, while the estimation model continues to use the default value of a RW() prior,

mod_ar1 <- mod |>

set_prior(time ~ AR1())

mod_ar1

#>

#> ------ Unfitted Poisson model ------

#>

#> divorces ~ age * sex + time

#>

#> exposure = population

#>

#> term prior along n_par n_par_free

#> (Intercept) NFix() - 1 1

#> age RW() age 4 4

#> sex NFix() - 2 2

#> time AR1() time 6 6

#> age:sex RW() age 8 8

#>

#> disp: mean = 1

#>

#> n_draw var_time var_age var_sexgender

#> 1000 time age sexWe set the mod_sim argument to mod_ar1 and generate the report.

res_ar1 <- report_sim(mod_est = mod, mod_sim = mod_ar1)

#> Warning: Large values for `lambda` used to generate Poisson variates.

#> ℹ 0.85 percent of values for `lambda` are above 1e+08.

#> ℹ Using deterministic approximation to generate variates for these values.

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

#> Building log-posterior function...

#> Finding maximum...

#> Drawing values for hyper-parameters...

res_ar1

#> $components

#> # A tibble: 8 × 7

#> term component .error .cover_50 .cover_95 .length_50 .length_95

#> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 (Intercept) effect 0.171 0.54 0.97 1.18 3.40

#> 2 age effect 0.0294 0.498 0.945 1.44 4.18

#> 3 age hyper 0.0860 0.44 0.82 0.595 2.08

#> 4 sex effect -0.0389 0.55 0.965 1.14 3.29

#> 5 time effect -0.157 0.602 0.965 1.24 3.60

#> 6 age:sex effect -0.0960 0.503 0.942 1.42 4.10

#> 7 age:sex hyper -0.0317 0.51 0.92 0.473 1.62

#> 8 disp disp -0.0443 0.47 0.94 0.227 0.663

#>

#> $augment

#> # A tibble: 2 × 7

#> .var .observed .error .cover_50 .cover_95 .length_50 .length_95

#> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 .fitted 29.9 0.0000898 0.510 0.947 0.00693 0.0201

#> 2 .expected 29.9 -3.70 0.517 0.946 13.6 44.1In this case, although actual coverage for the hyper-parameters (in the components part of the results) now diverges from the advertised coverage, coverage for the low-level rates (in the augment part of the results) is still close to advertised coverage.

4 The relationship between report_sim() and replicate_data()

Functions report_sim() and replicate_data() overlap, in that both use simulated data to provide insights into model performance. Their aims are, however, different. Typically, report_sim() is used before fitting a model, to assess its performance across a random selection of possible datasets, while replicate_data() is used after fitting a model, to assess its performance on the dataset to hand.